Flowers

Image Classifier

The Flowers Recognition dataset is a well-known dataset hosted on Kaggle. This blog post discusses how to build an image classifier using a CNN model in PyTorch, along with some additional contributions.

Kaggle dataset: https://www.kaggle.com/alxmamaev/flowers-recognition

Most of the code is adapted from https://jovian.ai/mohanavignesh/flower-classification, and a Contributions section has been added at the end of the blog post.

What is an image classifier?

Image classification is the task of having a computer analyse an image and identify the ‘class’ the image belongs to (or the probability of the image belonging to each ‘class’). A class is essentially a label, for instance ‘daisy’ or ‘rose’.
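To make the idea of class probabilities concrete, here is a minimal sketch that turns a classifier's raw scores (logits) into probabilities with softmax and picks the most likely class. The logit values are made up for illustration, and the class order assumes the alphabetical folder order that torchvision's `ImageFolder` assigns.

```python
import torch
import torch.nn.functional as F

# Class names in alphabetical order (the order ImageFolder assigns to folders)
classes = ["daisy", "dandelion", "rose", "sunflower", "tulip"]

# Hypothetical raw scores (logits) that a classifier might output for one image
logits = torch.tensor([2.0, 0.5, 0.1, -1.0, 0.3])

# Softmax turns the logits into probabilities that sum to 1
probs = F.softmax(logits, dim=0)

# The predicted class is the one with the highest probability
pred_idx = int(torch.argmax(probs))
predicted_class = classes[pred_idx]

for name, p in zip(classes, probs.tolist()):
    print(f"{name}: {p:.3f}")
print("Predicted:", predicted_class)  # Predicted: daisy
```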

In this post, we classify images from the flowers dataset.

The dataset provides the following classes:

1. dandelion

2. daisy

3. sunflower

4. rose

5. tulip

The dataset contains 4317 images in total.

First, we have to download the flower image dataset from Kaggle.

Use the following commands to download the dataset.

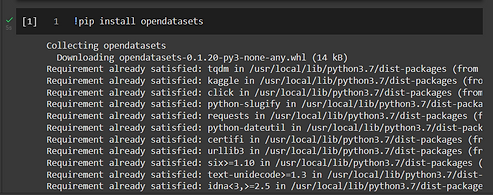

```
!pip install opendatasets
```

This installs the opendatasets library using pip.


Next, import the opendatasets library and download the flowers recognition dataset with the command opendatasets.download("< kaggle_dataset_link >"), passing the Kaggle dataset link as the argument.

To authenticate with Kaggle, go to your Kaggle account and open Settings -> Account -> API section -> Create New API Token.

This downloads a JSON file (kaggle.json). Open the JSON file, copy the username and key, and paste them when prompted after running the code below.

This lets you download the Kaggle dataset directly from within the notebook.


Next, import all the required packages.


os.listdir() lists the contents of the dataset directory; each subfolder corresponds to one class.

The classes available in the flower dataset are:

- dandelion
- daisy
- sunflower
- rose
- tulip
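Since each class lives in its own subfolder, `os.listdir` can also be used to count the images per class. This is a minimal sketch; the function name is hypothetical, and the dataset path you pass in depends on where opendatasets extracted the data (for example, something like `./flowers-recognition/flowers`, which is an assumption here).

```python
import os

def count_images_per_class(data_dir):
    """Return {class_name: number_of_files} for each subfolder of data_dir."""
    counts = {}
    # Each subfolder of data_dir is one class, e.g. daisy/, rose/, ...
    for class_name in sorted(os.listdir(data_dir)):
        class_dir = os.path.join(data_dir, class_name)
        if os.path.isdir(class_dir):  # skip stray files at the top level
            counts[class_name] = len(os.listdir(class_dir))
    return counts
```

Calling `count_images_per_class(data_dir)` on the flowers folder returns one entry per class, and the values should add up to the total number of images.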


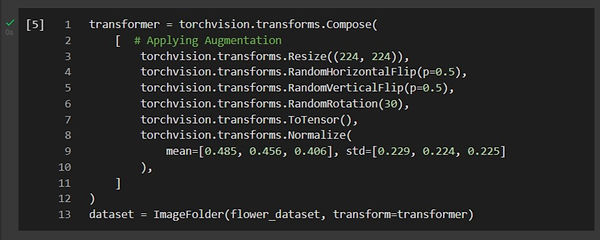

The torchvision.transforms module provides common image transformations that we can use to preprocess the data and augment it for training. torchvision.transforms.Compose chains several transforms together so that they are applied to each image one after another.


ToTensor

ToTensor converts a PIL image or a NumPy array of shape (H x W x C) into a tensor of shape (C x H x W), where C is the number of channels, H is the image height and W is the image width. For uint8 inputs (and most PIL image modes) it also scales the pixel values from the range [0, 255] to [0.0, 1.0]. A tensor is a multi-dimensional array, a generalization of vectors and matrices, that can represent many kinds of data; all of its elements share a single data type.

Resize

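Resize resizes the input image to the given size. If size is a pair (h, w), the output has exactly that size; if it is a single int, the smaller edge of the image is matched to that number and the aspect ratio is preserved. Resize accepts PIL images and tensor images, so if an image is neither (for example, a NumPy array), convert it to a tensor first and then apply Resize.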

Normalize

Normalize does not support PIL images, so it is applied to tensor images, i.e. after ToTensor. Given mean = (mean[1], ..., mean[n]) and std = (std[1], ..., std[n]) for n channels, it normalizes each channel of the input tensor as output[channel] = (input[channel] - mean[channel]) / std[channel].

RandomHorizontalFlip

Horizontally flips the given image randomly with a given probability p (default 0.5).

RandomVerticalFlip

Vertically flips the given image randomly with a given probability p (default 0.5).

RandomRotation

Rotates the image by a random angle. If degrees is a single number d, the angle is chosen from the range (-d, +d).

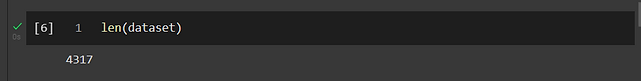

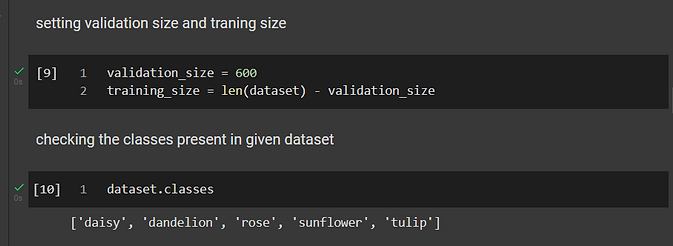

Length of the dataset

The len() function returns the number of images in the given dataset.


plt.imshow

plt.imshow comes from the pyplot module of the matplotlib library and displays data as an image. Along with the image, we also print its label by looking it up in the dataset's classes. show_example is a user-defined function that takes two parameters, img and label, and displays the image.


Call the function defined above to display an image from the dataset.
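A sketch of what such a function can look like. The extra `classes` parameter and the random image are assumptions for illustration (the post reads the classes from the dataset). The key detail is that `plt.imshow` expects (H, W, C) while PyTorch images are (C, H, W), so the channel axis is moved to the end. The `Agg` backend line only lets this run without a display; drop it in a notebook.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; omit this line in a notebook
import matplotlib.pyplot as plt
import torch

def show_example(img, label, classes):
    """Display a (C, H, W) image tensor and print its class name."""
    print("Label:", classes[label], "(" + str(label) + ")")
    # plt.imshow expects (H, W, C), so move the channel axis to the end
    return plt.imshow(img.permute(1, 2, 0).numpy())

# Hypothetical example: a random 3 x 64 x 64 "image" labelled as class 1
classes = ["daisy", "dandelion", "rose", "sunflower", "tulip"]
shown = show_example(torch.rand(3, 64, 64), 1, classes)  # prints: Label: dandelion (1)
```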


Next, we split the dataset into three parts:

- train
- test
- validation

random_split

We use the random_split() function to split the dataset.

First, the dataset is randomly split into training and validation sets of the given sizes. The validation set is then split again, according to the given sizes, into validation and test sets. Finally, we print the lengths of the train, test and validation sets.
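The two-stage split described above can be sketched as follows. The 80/10/10 proportions are an assumption (the post does not state the sizes it used), and a `TensorDataset` of 4317 indices stands in for the real image dataset.

```python
import torch
from torch.utils.data import TensorDataset, random_split

# Stand-in dataset with 4317 items (the size of the flowers dataset)
dataset = TensorDataset(torch.arange(4317))

# Hypothetical sizes: 80% train, the remaining 20% shared by validation and test
train_size = int(0.8 * len(dataset))     # 3453
rest_size = len(dataset) - train_size    # 864
gen = torch.Generator().manual_seed(42)  # fixed seed for reproducible splits

# Step 1: split into train and a temporary validation set
train_ds, val_ds = random_split(dataset, [train_size, rest_size], generator=gen)

# Step 2: split the temporary validation set into validation and test sets
val_size = rest_size // 2
test_size = rest_size - val_size
val_ds, test_ds = random_split(val_ds, [val_size, test_size], generator=gen)

print(len(train_ds), len(val_ds), len(test_ds))  # 3453 432 432
```

Computing the last size as a difference, rather than as another percentage, guarantees that the lengths add up exactly to the dataset size, which `random_split` requires.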


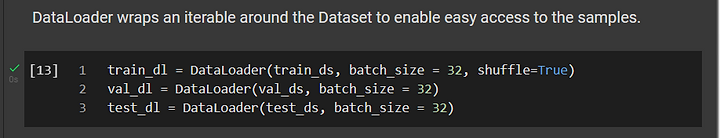

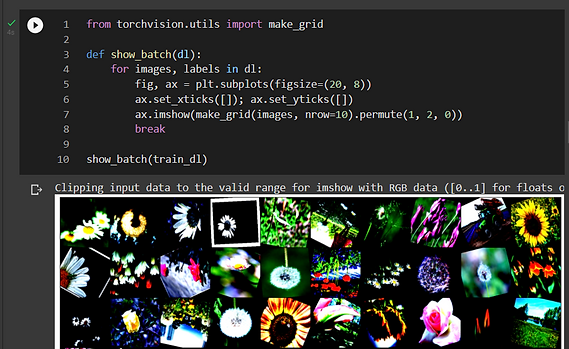

make_grid

We import make_grid from the utils module of the torchvision library. make_grid arranges a batch of images into a single grid image.

In the code below, we define a function show_batch that displays a batch of images.

We use the subplots function, which creates a figure and axes to draw on (a figure can hold multiple plots).

set_xticks: sets the x-axis ticks to the given list of ticks.

set_yticks: sets the y-axis ticks to the given list of ticks.

We then call the function, passing the training data as the parameter.


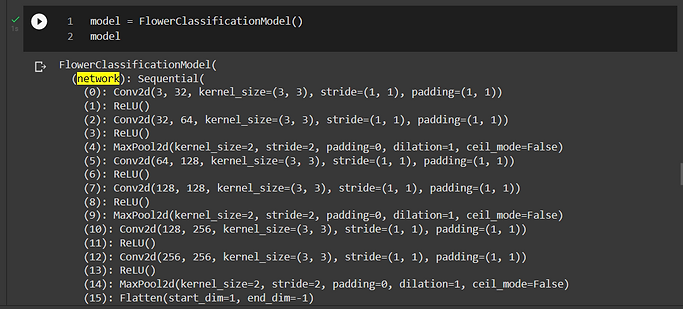

Convolutional Neural Network

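A convolutional neural network (CNN) is the kind of model generally used in image recognition and other tasks that process pixel data. A CNN consists of an input layer, an output layer and hidden layers (multiple convolutional layers, pooling layers, fully connected layers and normalization layers). Because each convolutional filter is shared across all positions of the image, a CNN needs far fewer parameters than a fully connected network on the same input, which makes it more effective on images and easier to train.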

cross_entropy

F.cross_entropy computes the cross-entropy loss between the input (the model's raw output scores, or logits) and the target (the true class indices).
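A small check of what `F.cross_entropy` computes: the mean, over the batch, of the negative log of the softmax probability assigned to the true class. The logits and targets are made-up values.

```python
import torch
import torch.nn.functional as F

# Hypothetical logits for a batch of 2 images over 5 classes
logits = torch.tensor([[2.0, 0.5, 0.1, -1.0, 0.3],
                       [0.2, 0.1, 3.0, 0.0, -0.5]])
targets = torch.tensor([0, 2])  # true class indices

loss = F.cross_entropy(logits, targets)

# Same value by hand: mean of -log(softmax probability of the true class)
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(2), targets].mean()

print(torch.allclose(loss, manual))  # True
```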

Conv2d

Applies a 2D convolution over an input image composed of several input planes (channels).

ReLU

Applies the rectified linear unit function element-wise: ReLU(x) = max(0, x).

MaxPool2d

Applies 2D max pooling over an input image composed of several input planes, keeping the maximum value in each pooling window.

nn.Sequential

A sequential container: modules are called in the order in which they are passed to the constructor, and the whole container can be treated as a single module, so calling the Sequential runs the input through each of the modules it stores in turn.

nn.Flatten

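Flattens a contiguous range of dimensions of a tensor into one dimension (by default, every dimension except the batch dimension). It is commonly used inside nn.Sequential to connect convolutional layers to linear layers.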
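To see how Conv2d, ReLU, MaxPool2d and Flatten change tensor shapes, this sketch runs a dummy batch through the same convolutional stack that FlowerClassificationModel uses and prints the shape after each pooling layer and after flattening. It assumes 224 x 224 RGB inputs, the size implied by the model's first linear layer (256 * 28 * 28 inputs).

```python
import torch
import torch.nn as nn

# The convolutional part of FlowerClassificationModel, followed by Flatten
features = nn.Sequential(
    nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1), nn.ReLU(),
    nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1), nn.ReLU(),
    nn.MaxPool2d(2, 2),
    nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1), nn.ReLU(),
    nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1), nn.ReLU(),
    nn.MaxPool2d(2, 2),
    nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1), nn.ReLU(),
    nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1), nn.ReLU(),
    nn.MaxPool2d(2, 2),
    nn.Flatten(),
)

x = torch.zeros(1, 3, 224, 224)  # one dummy 224 x 224 RGB image
with torch.no_grad():
    for layer in features:
        x = layer(x)
        if isinstance(layer, (nn.MaxPool2d, nn.Flatten)):
            print(type(layer).__name__, tuple(x.shape))
# MaxPool2d (1, 64, 112, 112)
# MaxPool2d (1, 128, 56, 56)
# MaxPool2d (1, 256, 28, 28)
# Flatten (1, 200704)
```

The padded 3 x 3 convolutions keep the height and width unchanged, while each 2 x 2 max pooling halves them: 224 -> 112 -> 56 -> 28.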

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def accuracy(outputs, labels):
    # Fraction of predictions (argmax over the class scores) that match the labels
    _, preds = torch.max(outputs, dim=1)
    return torch.tensor(torch.sum(preds == labels).item() / len(preds))


class FlowerClassificationModel(nn.Module):
    def __init__(self):
        super().__init__()
        # The output shapes in the comments assume 224 x 224 RGB input images,
        # which is what the first Linear layer (256 * 28 * 28 inputs) expects
        self.network = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),  # output: 64 x 112 x 112

            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),  # output: 128 x 56 x 56

            nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),  # output: 256 x 28 x 28

            nn.Flatten(),
            nn.Linear(256 * 28 * 28, 1024),
            nn.ReLU(),
            nn.Linear(1024, 512),
            nn.ReLU(),
            nn.Linear(512, 5))  # one score (logit) per flower class

    def forward(self, xb):
        return self.network(xb)

    def training_step(self, batch):
        images, labels = batch
        out = self(images)                   # Generate predictions
        loss = F.cross_entropy(out, labels)  # Calculate loss
        return loss

    def validation_step(self, batch):
        images, labels = batch
        out = self(images)                   # Generate predictions
        loss = F.cross_entropy(out, labels)  # Calculate loss
        acc = accuracy(out, labels)          # Calculate accuracy
        return {'val_loss': loss.detach(), 'val_acc': acc}

    def validation_epoch_end(self, outputs):
        batch_losses = [x['val_loss'] for x in outputs]
        epoch_loss = torch.stack(batch_losses).mean()  # Combine losses
        batch_accs = [x['val_acc'] for x in outputs]
        epoch_acc = torch.stack(batch_accs).mean()     # Combine accuracies
        return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

    def epoch_end(self, epoch, result):
        print("Epoch [{}], train_loss: {:.4f}, val_loss: {:.4f}, val_acc: {:.4f}".format(
            epoch, result['train_loss'], result['val_loss'], result['val_acc']))
```
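The training loop itself is not shown in the post. A common pattern for models with these step methods, assumed here rather than taken from the post, is a `fit` function that calls `training_step` on each training batch and an `evaluate` function that aggregates `validation_step` results. To keep the sketch self-contained and fast, it uses a hypothetical `TinyModel` with the same method interface and random data; with the real model you would pass `FlowerClassificationModel()` and the real data loaders.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset


def accuracy(outputs, labels):
    """Fraction of predictions (argmax over classes) that match the labels."""
    return (torch.argmax(outputs, dim=1) == labels).float().mean()


@torch.no_grad()
def evaluate(model, val_loader):
    """Run validation_step on every batch and combine the results."""
    model.eval()
    outputs = [model.validation_step(batch) for batch in val_loader]
    return model.validation_epoch_end(outputs)


def fit(epochs, lr, model, train_loader, val_loader, opt_func=torch.optim.SGD):
    """Train for some epochs; return the per-epoch result dicts."""
    history = []
    optimizer = opt_func(model.parameters(), lr)
    for epoch in range(epochs):
        model.train()
        train_losses = []
        for batch in train_loader:
            loss = model.training_step(batch)
            train_losses.append(loss.detach())
            loss.backward()        # compute gradients
            optimizer.step()       # update the weights
            optimizer.zero_grad()  # reset gradients for the next batch
        result = evaluate(model, val_loader)
        result["train_loss"] = torch.stack(train_losses).mean().item()
        model.epoch_end(epoch, result)
        history.append(result)
    return history


class TinyModel(nn.Module):
    """Hypothetical stand-in with the same step methods as FlowerClassificationModel."""
    def __init__(self):
        super().__init__()
        self.network = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 5))

    def forward(self, xb):
        return self.network(xb)

    def training_step(self, batch):
        images, labels = batch
        return F.cross_entropy(self(images), labels)

    def validation_step(self, batch):
        images, labels = batch
        out = self(images)
        return {"val_loss": F.cross_entropy(out, labels), "val_acc": accuracy(out, labels)}

    def validation_epoch_end(self, outputs):
        loss = torch.stack([x["val_loss"] for x in outputs]).mean()
        acc = torch.stack([x["val_acc"] for x in outputs]).mean()
        return {"val_loss": loss.item(), "val_acc": acc.item()}

    def epoch_end(self, epoch, result):
        print("Epoch [{}], train_loss: {:.4f}, val_loss: {:.4f}, val_acc: {:.4f}".format(
            epoch, result["train_loss"], result["val_loss"], result["val_acc"]))


torch.manual_seed(0)
data = TensorDataset(torch.rand(32, 3, 8, 8), torch.randint(0, 5, (32,)))
loader = DataLoader(data, batch_size=8)
history = fit(2, 0.01, TinyModel(), loader, loader)
```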


- The graph above shows the accuracy after each epoch.
- The accuracy shown in the graph is 0.7115.
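A graph like this can be drawn with a few lines of matplotlib. This is a sketch assuming `history` is a list of the per-epoch dictionaries produced by `validation_epoch_end`, each with a `'val_acc'` key; the function name is hypothetical. The `Agg` backend line only lets it run without a display.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; omit this line in a notebook
import matplotlib.pyplot as plt

def plot_accuracies(history):
    """Plot validation accuracy per epoch from a list of result dicts."""
    accuracies = [result["val_acc"] for result in history]
    fig, ax = plt.subplots()
    ax.plot(accuracies, "-x")
    ax.set_xlabel("epoch")
    ax.set_ylabel("accuracy")
    ax.set_title("Accuracy vs. No. of epochs")
    return ax
```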

CONTRIBUTIONS

- Changed a few hyperparameter values to see how they increase or decrease the accuracy of the image classifier.
- I have also
- Understood the code and documented it.

REFERENCES

- Referred to Google for image classifiers and watched YouTube tutorials to learn more about CNN models.
- https://jovian.ai/mohanavignesh/flower-classification/v/2?utm_source=embed